Beyond the Bug Hunt: Transforming Manual Testing from a Liability into a Strategic Asset

In the relentless pursuit of speed and innovation, software development organizations have come to view manual testing as a necessary, if burdensome, stage in the software development lifecycle (SDLC). It is often treated as a simple cost center, a final hurdle to clear before release.

This perspective obscures a dangerous reality!

For most organizations, the conventional approach to Quality Assurance (QA) is not merely a line item on a budget: it is an accumulating liability, often invisible.

This is QA Debt: a form of operational debt that silently compounds, eroding product quality, slowing velocity, and inflating costs over time.

QA should be a profit center, not a cost center!

Hidden liabilities are not a new idea. The problem mirrors the challenge many organizations face with 24/7 operations, where the true costs are often obscured.

As detailed in a report by the Consortium for Information & Software Quality,1 poor software quality cost U.S. companies an estimated $2.41 trillion in 2022. Even a single critical defect can be catastrophic: 91% of large enterprises report that an hour of downtime costs over $300,000.

In an era where one bad software experience can drive over half of customers away,2 businesses can no longer afford to treat QA as an afterthought or a checkbox.

This article:

- Deconstructs the true, fully-loaded costs and pitfalls of today’s QA and testing models, from in-house manual testing to outsourced QA and premature test automation.

- Uncovers a major missed opportunity around deriving high-quality user and internal documentation from manual testing.

- Reveals why a strategic alternative is not just cheaper, but essential for any organization serious about catching every corner case before customers do.

Benchmark: The True Cost of In-House QA

To understand the economics of QA, we must first establish a baseline: the fully-loaded cost of a U.S.-based QA professional working in-house.

An average QA Engineer in the U.S. earns around $100,000 per year,3 but salary is just part of the picture. Benefits, taxes, and overhead typically add 25–40% on top of base pay.4

In other words, the true cost of employing a full-time QA tester easily climbs to $125,000–$140,000 annually for a mid-level role.
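As a rough check on that range, the arithmetic can be sketched in a few lines of Python. The function name and the whole-number-percent convention are illustrative assumptions; the inputs are the figures quoted above (about $100,000 in base pay and 25–40% in benefits, taxes, and overhead).

```python
def fully_loaded_cost(base_salary: int, overhead_pct: int) -> int:
    """Annual cost of an employee once benefits, taxes, and overhead are added.

    overhead_pct is a whole-number percentage of base pay (25 means 25%).
    """
    return base_salary * (100 + overhead_pct) // 100


# Figures quoted in the text: ~$100,000 base pay, 25-40% overhead.
low = fully_loaded_cost(100_000, 25)
high = fully_loaded_cost(100_000, 40)
print(f"${low:,} to ${high:,}")  # prints "$125,000 to $140,000"
```

Swapping in your own base salary and overhead rate gives the baseline against which the other models in this article can be compared.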

What does this investment buy you?

In theory, a skilled in-house QA team working normal hours should deliver thorough testing with minimal defects escaping to production. When fully resourced, an internal QA team can maintain high standards and catch issues early, preventing the exponentially higher costs of fixes later in the lifecycle (fixing a bug during testing is ~15x cheaper than in production).5

Thorough early testing pays off, and this is the gold-standard scenario against which other models should be measured.

The Hidden Costs of Local Manual Testing

In reality, few organizations can afford an army of local QA engineers to test every release meticulously. Those that do often face diminishing returns and unexpected human-factor costs.

Repetition Fatigue & Productivity Loss

Manual testing is labor-intensive and monotonous.

Running the same test scripts release after release can dull even the best testers’ concentration, just as night-shift work erodes productivity by 7.7% due to fatigue.6 Repetitive manual work can lead to oversight and error.

A bored or fatigued tester is more likely to miss a critical edge case, undermining the very quality mandate they were hired to uphold.

Turnover and Talent Drain

Highly skilled QA professionals often view repetitive manual testing as a stepping stone, not a career destination.

Teams that rely on brute-force manual regression can see elevated attrition as testers seek more engaging roles. The cost to replace a technical employee can be 50–125% of their annual salary when you factor in recruiting and training.7

High turnover means new testers constantly climbing the learning curve of your product—a recipe for more mistakes and missed bugs.

Tester Fatigue and the Fatigue Tax

The single greatest unmanaged risk in any manual testing process is the human element itself. This is not an indictment of the skill or dedication of individual testers: it’s an acknowledgment that most QA operating models place talented professionals in a system that guarantees diminished returns.

Manual software testing involves highly repetitive tasks, immense pressure from deadlines, and significant mental overload. This combination inevitably leads to tester fatigue: a gradual decline in a tester’s focus, speed, and accuracy that directly degrades the quality of their work.6

Tester fatigue has a direct and quantifiable impact on software quality.

Tester fatigue is more than simple weariness: it is a primary driver of human error, leading to missed defects, invalid data entry, and assumption errors where testers’ past experiences cause them to overlook new, unexpected behaviors.7

Research confirms that high levels of stress, a constant companion to deadline pressure and overtime, have a direct negative correlation with software quality, resulting in a higher number of defects escaping into later development stages or, worse, into production.8

The consequences of this systemic fatigue are severe and costly. It is a leading cause of tester burnout and high turnover rates, which can cost an organization up to 30% of a position’s annual salary in recruitment and training for a replacement.9 This creates a vicious cycle of hiring, onboarding, burning out, and replacing talent, which constantly drains team morale, institutional knowledge, and productivity.

This cycle imposes a Fatigue Tax on the organization. This is not a one-time cost but a compounding liability paid with every release cycle. It starts with the very structure of most QA departments: placing testers at the end of the development cycle, where pressure is highest and tasks are most repetitive, is perfectly designed to produce fatigue.

This fatigue, in turn, is a known cause of human error and missed defects.10 A bug missed by a fatigued tester does not simply vanish; it is discovered later in the cycle by another developer, during user acceptance testing, or by a customer. At this point, the cost to remediate the bug is exponentially higher than if it had been caught at its source. Research has shown that “finding and fixing bugs is the most expensive cost element for large systems.”11

This reframes the entire problem. The issue is not that individual testers are making mistakes, but that the organization’s operating model is systematically manufacturing those mistakes. The focus must shift from personnel to process.

Limited Capacity Results in Inadequate Coverage

Budget constraints often limit the number of testers you can hire, which directly results in insufficient coverage of the product.

Understaffed QA teams have to cut corners, testing only critical paths and happy flows. This leaves countless corner cases untested until they blow up in production, causing emergency hotfixes and customer pain.

Skimping on QA might save money up front, but it virtually guarantees higher costs later when undetected defects surface.8

The Coverage Mirage: A Dangerous Illusion of Safety

Organizations operating under the traditional QA model often see the presence of a testing team as evidence of adequate quality test coverage.

This is flat-out wrong! In these organizations, test coverage is a mirage: a vanity metric that communicates no value but provides a dangerous illusion of safety.

Achieving and maintaining high-quality, comprehensive test coverage is a complex and persistent challenge, especially in modern Agile and DevOps environments with rapid release cycles.12 There is far more to it than can be captured in a single metric.

Under constant pressure to meet deadlines, testers are forced to prioritize their efforts. Without a rigorous, risk-based strategy, this prioritization often devolves into an ad-hoc process that results in rushed testing and significant, unknown gaps in coverage.13 The theoretical ideal of 100% test coverage is a myth, but even the practical goal of risk-based coverage (focusing effort on the most critical and high-risk areas of an application) is difficult to achieve.

Getting there takes deep system knowledge, a structured approach, and freedom from the very time pressures that define the traditional model.14 The administrative work essential for effective coverage, such as creating and maintaining a requirements traceability matrix, is often the first casualty when teams are pressured to “just test.”15
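One common way to make risk-based prioritization concrete is to score each area of the application by its likelihood of failure and its business impact, then test in descending order of the product. The sketch below assumes a 1–5 scale for both factors; the class name, the scale, and the sample areas are hypothetical placeholders, not data from any real product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RiskArea:
    name: str
    likelihood: int  # 1 (rarely fails) to 5 (fails often); assumed scale
    impact: int      # 1 (cosmetic) to 5 (business-critical); assumed scale

    @property
    def risk(self) -> int:
        # Simple likelihood x impact score; other weightings are possible.
        return self.likelihood * self.impact


def prioritize(areas: list[RiskArea]) -> list[RiskArea]:
    """Order areas from highest to lowest risk score."""
    return sorted(areas, key=lambda a: a.risk, reverse=True)


# Hypothetical areas, for illustration only.
areas = [
    RiskArea("login happy path", likelihood=1, impact=5),
    RiskArea("payment retry after timeout", likelihood=4, impact=5),
    RiskArea("profile avatar upload", likelihood=3, impact=1),
]

for area in prioritize(areas):
    print(f"{area.risk:>2}  {area.name}")
```

In this made-up example the familiar happy path scores well below the unfamiliar retry case, which is the kind of edge case that tends to go unexamined when prioritization is ad hoc.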

This systemic failure to achieve meaningful coverage creates a hidden risk portfolio within the organization. When a fatigued tester (suffering from the “Fatigue Tax”) is forced to make rapid prioritization decisions, they will inevitably create gaps. They will follow the path of least resistance, repeatedly testing the same well-understood “happy paths” while complex, unfamiliar, and often more critical edge cases go unexamined.

This leads to a profound disconnect between perceived and actual risk.

Leadership reviews a “Test Pass” report from the QA team and assumes the product is stable and ready for release. In reality, the most complex and failure-prone components of the system may not have been tested at all!

This hidden risk portfolio is a ticking time bomb, making all future development more dangerous and costly, as new features are built upon a foundation that is believed to be stable but is actually riddled with latent defects.

The Coverage Mirage is not merely a QA problem; it is a fundamental threat to business continuity.

The Automation Paradox: Why Your Biggest Investment Is Set Up to Fail

Faced with the mounting costs of the Fatigue Tax and the unnerving uncertainty of the Coverage Mirage, many leaders turn to a seemingly obvious solution: We’ll just automate our way out of this problem.

This impulse is understandable, but it is also the final act in the creation of a massive liability.

Without the right foundation, test automation is not a solution; it is an accelerant for failure. This is the Automation Paradox: the very tool meant to solve the problems of manual testing often ends up magnifying them.

Test automation requires a significant upfront investment in tools, training, and script development, which is substantially higher than that of manual testing.16 A positive return on this investment is only possible if the ongoing cost of executing and maintaining an automated test campaign is low and the number of times the campaign is run is high enough to cross a break-even point.17
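The break-even condition can be sketched as a small calculation: automation pays off only once its per-run savings have repaid its extra upfront cost. The function and parameter names below are illustrative, and every dollar figure is a hypothetical input chosen to show the shape of the calculation, not a benchmark.

```python
import math
from typing import Optional


def break_even_runs(auto_upfront: float, auto_per_run: float,
                    manual_upfront: float, manual_per_run: float) -> Optional[int]:
    """Smallest number of runs at which automation is no more expensive than manual.

    Returns None when automation never catches up, i.e. when running and
    maintaining the automated suite costs as much per run as manual testing.
    """
    extra_upfront = auto_upfront - manual_upfront
    saving_per_run = manual_per_run - auto_per_run
    if extra_upfront <= 0:
        return 0
    if saving_per_run <= 0:
        return None
    return math.ceil(extra_upfront / saving_per_run)


# Hypothetical campaign: a stable suite that is cheap to run and maintain.
print(break_even_runs(20_000, 200, 2_000, 1_000))    # prints 23

# Hypothetical brittle suite: maintenance makes each run cost more than manual.
print(break_even_runs(20_000, 1_200, 2_000, 1_000))  # prints None
```

The second call illustrates the failure mode: when maintenance pushes the per-run cost of automation above the manual cost, no number of runs recovers the investment.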

However, attempting to build an automation suite on top of an unstable foundation is a recipe for failure. Automating features that are poorly understood or subject to frequent change results in brittle, high-maintenance scripts that constantly break, requiring more effort to fix than they save.18

The most common and costly mistake is attempting to automate everything. By contrast, a successful automation strategy is highly selective, targeting stable, high-value, repetitive regression tests while leaving new, volatile, or exploratory testing to manual effort.19

Critically, automated tests are only as good as the manual understanding that informs them; automating a flawed or incomplete manual process simply codifies those flaws and executes them with greater speed and frequency.20

When an organization suffering from the Fatigue Tax and the Coverage Mirage attempts to implement automation, it is building on sand. The team will inevitably automate the wrong things (low-value tests that are easy to script) or automate the right things incorrectly (based on a flawed or incomplete understanding of the feature’s behavior).

Far from reducing QA Debt, this dynamic converts it into a more toxic, high-interest form.

The development team’s focus shifts from testing the application to maintaining a broken and unreliable automation suite. The large initial investment is wasted, the promised ROI never materializes, and the entire organization becomes disillusioned with automation as a concept, poisoning the well for any future, better-conceived initiatives.

This paradox reveals a critical, unbreakable dependency chain: sustainable automation requires a robust manual blueprint, which in turn requires a fatigue-free, high-coverage testing process. By attempting to jump to the end of this chain, organizations guarantee the collapse of the entire QA system.

The Documentation Deficit: Hidden Liability & Sleeping Opportunity

Compounding the challenges of fatigue, incomplete coverage, and misguided automation is a pervasive liability: the documentation deficit.

Inadequate documentation—both user-facing and internal—is a widespread form of technical debt that ambushes teams in the SDLC, inflating costs, hampering productivity, and perpetuating knowledge silos.

Poor documentation is a silent killer, eroding team velocity and escalating long-term expenses.

User documentation, such as guides, tutorials, and help files, is essential for customer success, yet often neglected or outdated. Studies show that inadequate user documentation increases development time by up to 18%, raises support costs, and decreases productivity.21 It frustrates users, driving up support tickets and churn, with potential costs in the millions for lost efficiency and rework.22 Poor onboarding due to subpar docs is a leading cause of customer churn, as first impressions matter and bad experiences drive users away.23

Internal documentation—like architecture overviews and process guides—is equally critical but frequently insufficient.

Maintenance consumes 60-80% of software lifecycle costs, and poor internal documentation significantly amplifies this.22 Organizations with subpar documentation see a 37% increase in ticket resolution time,22 leading to frustrated developers spending hours deciphering code instead of innovating. This deficit breeds confusion and silos, with different teams holding varying interpretations of systems.24 Outdated or incomplete docs create a false sense of security, misleading teams into believing systems are well-understood when they are not, exacerbating technical debt and slowing development as manual checks become necessary.25

The frequency of this issue is alarming: many software companies prioritize code over documentation, resulting in chronic gaps that stall product adoption, waste developer time on troubleshooting, and affect team morale and velocity.26

Despite its cost, the documentation deficit also conceals a sleeping opportunity. Standalone user and internal documentation is often orphaned and falls behind production changes, becoming unreliable. By contrast, manual test scripts are maintained frequently to stay in sync with the code, making them a current and reliable source of process information.

When properly leveraged, these detailed test scripts can be transformed into high-quality user and internal documentation, closing these gaps and turning QA into a source of additional value.
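To illustrate the idea, here is a minimal sketch of turning a structured manual test case into a Markdown how-to section. The `ManualTestCase` schema, the field names, and the sample content are assumptions made for this example; real test management tools store cases in their own formats, and a production workflow would also need human editing.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Step:
    action: str    # what the tester (or user) does
    expected: str  # what the system should show in response


@dataclass(frozen=True)
class ManualTestCase:
    title: str
    preconditions: list[str]
    steps: list[Step]


def to_user_guide(case: ManualTestCase) -> str:
    """Render a manual test case as a Markdown how-to section."""
    lines = [f"## How to {case.title}", ""]
    if case.preconditions:
        lines.append("Before you start:")
        lines.extend(f"- {p}" for p in case.preconditions)
        lines.append("")
    for number, step in enumerate(case.steps, start=1):
        # The expected result becomes the confirmation the reader should see.
        lines.append(f"{number}. {step.action} {step.expected}")
    return "\n".join(lines)


# Hypothetical test case, for illustration only.
case = ManualTestCase(
    title="reset your password",
    preconditions=["You have access to the email address on your account."],
    steps=[
        Step('Select "Forgot password" on the sign-in page.', "A reset form appears."),
        Step("Enter your email address and submit the form.", "A confirmation message is shown."),
    ],
)
print(to_user_guide(case))
```

Because the guide is generated from the same steps the testers execute, it changes whenever the test case changes, which is the property that standalone documentation usually lacks.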

The Unseen Cost: Bug Escapes & Brand Damage

Beyond the direct costs of salaries and staff, insufficient testing carries an insidious price in defects that reach customers. Every bug in production is a tiny customer experience (CX) time-bomb. Studies show over half of consumers will cut spending with a company after a single bad experience.2

Each missed defect risks not only a support incident, but potentially lost customers, negative reviews, and reputational harm that no bug-fix patch can fully undo.

Consider that acquiring a new customer is 4-5x more expensive than retaining an existing one.5 A serious bug can instantly negate years of customer goodwill. In sectors like finance or healthcare, a defect isn’t just embarrassing—it could violate compliance or safety, carrying legal penalties on top of business losses.

The documentation deficit amplifies these risks: without clear user docs, customers struggle even with bug-free software, leading to frustration and churn. Internally, poor docs prolong bug fixes, escalating downtime costs.

These downstream expenses rarely show up on an operating budget, but they are very real. Poor software quality can grind operations to a halt and leave a lasting stain on a company’s reputation.1

In short, every dollar “saved” by under-testing may cost hundreds more in emergency fixes, downtime, lost business, and documentation-related inefficiencies.

Outsourced QA: A False Economy

To escape the high cost of local QA, many companies turn to offshore or third-party QA vendors.

On paper, offshoring testing to a low-wage region or contracting a specialized QA firm promises massive savings. You pay a fraction of local salaries and let someone else handle the grunt work.

This is almost always a false economy.

A closer look reveals that outsourced QA arrangements suffer from the same pitfalls as traditional IT offshoring… plus a few unique to the testing process.

Moreover, by separating testing from your core team, vendors miss the testing-to-documentation connection, forgoing the chance to generate valuable user and internal docs from test scripts—an expensive opportunity cost that perpetuates knowledge gaps and inflates long-term expenses.

The Flawed “Vendor Team” Model

Traditional QA outsourcing typically means handing over a chunk of your testing effort to a separate team at a vendor, often overseas. It’s their team, their tools, their rules, operating in parallel with your development organization.

This model has fundamental flaws that chip away at the apparent cost savings:

- Integration Tax (15%+ Overhead): Because the vendor team is external, significant effort goes into managing the interface between their work and yours. Requirements handoffs, separate bug reporting systems, management status meetings: all incur overhead. Industry analysis finds that the project management layer needed to coordinate adds 15-20% to the total project cost.27

- Communication & Coordination Friction (10% Productivity Loss): Even with the extra layer of management (if not because of it), the model is inefficient. Asynchronous communication, cultural gaps, and rework due to misunderstandings are inevitable. This friction imposes a productivity penalty conservatively estimated at 10%.28

- The Cost of Poor Quality (15% Productivity Loss): The low-cost model is built on inexperienced staff and high attrition (30-50%).29 This guarantees a state of chronic inexperience, leading to more errors and rework. This “Cost of Poor Quality” (COPQ) can be modeled as an additional 15% productivity loss.30

Combined, the communication friction (10%) and the cost of poor quality (15%) create a total productivity loss of 25%, on top of the 15-20% integration overhead. Together, these penalties consume a massive portion of the initial labor arbitrage savings. This model is a primary driver of the CX gap, as it is structurally incapable of delivering the quality it promises.
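One way to see how these penalties erode the headline savings is to compute the cost per unit of useful output. The model below is an assumption made for illustration, not a formula from the cited analyses: overhead inflates what you pay, and productivity loss shrinks what you get back for it. The nominal cost of 100 is an index, not a real price.

```python
def effective_cost(nominal_cost: float, overhead: float, productivity_loss: float) -> float:
    """Cost per unit of useful output after overhead and lost productivity.

    Assumed model: overhead is added on top of the nominal cost, and only
    (1 - productivity_loss) of the paid effort turns into useful work.
    """
    if not 0 <= productivity_loss < 1:
        raise ValueError("productivity_loss must be in [0, 1)")
    return nominal_cost * (1 + overhead) / (1 - productivity_loss)


# Percentages from the list above: 15% integration overhead,
# 10% friction plus 15% cost of poor quality.
cost = effective_cost(100.0, overhead=0.15, productivity_loss=0.10 + 0.15)
print(round(cost, 1))  # prints 153.3
```

Under this assumed model, a vendor that looks like 100 on the rate card costs roughly 153 per unit of useful work, so the nominal discount has to be large before any real saving remains.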

The Dependency Trap: Failing to Build Internal QA Muscle

Beyond the immediate operational flaws, traditional QA outsourcing carries a profound long-term risk: vendor dependency.31

This dependency manifests in several ways. First, reliance on a single vendor can lead to vulnerabilities if the provider encounters operational challenges, financial instability, or resource constraints, directly impacting your project’s success.32, 33 Switching vendors becomes expensive and technically challenging, often involving knowledge transfer gaps and unforeseen costs.34

Moreover, outsourcing stifles internal growth. When QA is treated as a “black box” service, your team misses out on building expertise, refining processes, and accumulating institutional knowledge.31 This creates knowledge silos and gaps, as internal teams lack the hands-on experience needed to innovate or adapt QA strategies to evolving business needs.35 Over time, this erodes your organization’s ability to maintain high standards independently, turning QA into a perpetual expense rather than a strategic asset.

The opportunity cost is immense: instead of cultivating a resilient, in-house capability that compounds value over time, you’re locked into ongoing vendor fees and reduced flexibility.35 As we’ll explore later, JGS counters this trap by embedding talent into your workflows, ensuring you build muscle rather than dependency.

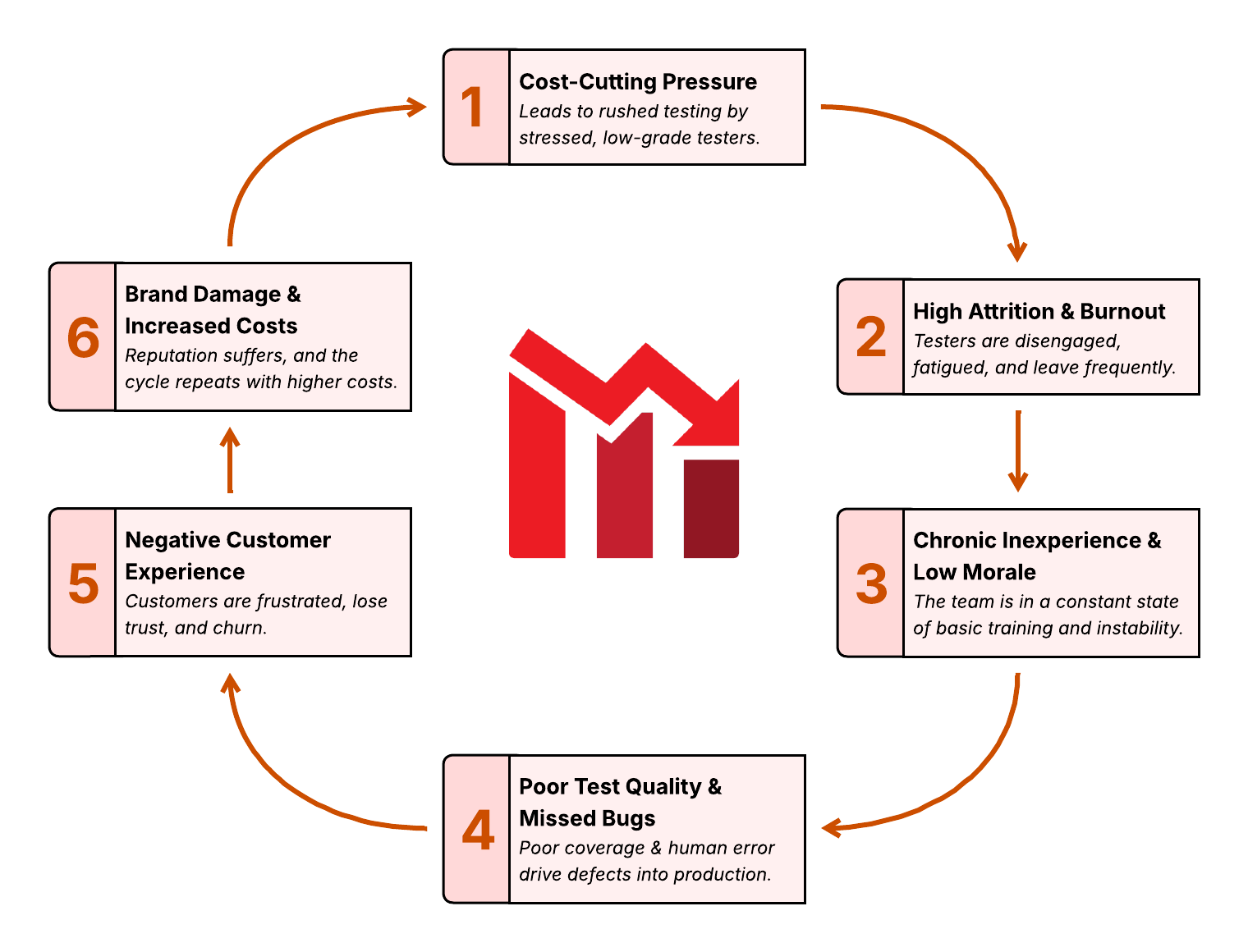

Racing To The Bottom: A Vicious Cycle

The conventional QA models—whether in-house or traditional outsourcing—create a self-reinforcing loop of declining quality and rising costs. This vicious cycle is driven by structural flaws in how testing is resourced and executed.

- Cost-Cutting Pressure: Budget constraints lead to understaffed teams, rushed manual testing, and reliance on low-cost, inexperienced resources.

- High Attrition & Burnout: Overworked and underpaid testers experience fatigue and disengagement, resulting in frequent turnover.

- Chronic Inexperience & Low Morale: Constant onboarding erodes institutional knowledge, keeping the team in perpetual “basic training” mode.

- Poor Test Quality & Missed Bugs: Inconsistent coverage and human errors allow defects to slip through to production, with inadequate documentation exacerbating issues.

- Negative User Experience: Bugs and unclear docs frustrate users, leading to complaints, churn, and lost trust.

- Brand Damage & Increased Costs: Emergency fixes, reputational harm, higher support due to doc gaps, and long-term expenses perpetuate the cycle.

John Galt Services: The Smart Alternative

It’s clear that delivering first-class quality and controlling costs requires a fundamentally different approach. John Galt Services (JGS) applies economic insights to address structural issues in standard QA approaches, enabling your QA operation to achieve its full potential by easing time, resource, and budget constraints.

We help eliminate the root causes of QA failures by providing remedies that address both talent strategy and process timing. The result is a solution that transforms your QA from a cost center into a source of competitive advantage.

It’s YOUR way… only better.

Read on to discover how JGS helps transform your existing QA operation from a cost center into a robust profit center.

Attracting Elite QA Talent

JGS tackles the quality issue at its source: the people doing the testing. We don’t hire average testers—we recruit elite professionals in Indonesia (based in tech hub Bali) and make it worth their while to build a career with us.

How?

- Premium Compensation: JGS pays our QA engineers 2-3x the local market rate, an unheard-of figure in offshore staffing. This attracts a huge pool of applicants and lets us cherry-pick the best. It also means our testers treat their roles as long-term careers, not transient gigs. We have virtually zero attrition, a stark contrast to typical offshore teams, so our client teams enjoy continuity and deepening expertise over time.

- Top 0.1% Selection: We evaluate well over 1,000 candidates for every hire, ensuring only the very best QA talent ever makes it onto a client team. These are testers with exceptional analytical skills, often with programming or automation backgrounds, and a passion for breaking things in order to make them better.

By providing the best and brightest to integrate into your team, JGS ensures that your test cases are designed smartly and executed by people who will catch nuances that others miss. They aren’t just following a script: they’re thinking like users (and often like hackers) to anticipate corner cases.

In effect, we invert the typical offshoring bargain: instead of cut-rate juniors yielding cut-rate quality, we provide world-class QA talent at a cost still far below that of a local team, empowering your QA operation.

Trading Margin for Outcomes

How can we afford to hire such elite testers and still charge our customers significantly less than a U.S. salary?

JGS makes a deliberate trade-off: we operate on a lean margin to maximize results for clients. Instead of fat markups and layers of bureaucracy, we channel investment into our people and their performance.

This philosophy—trading vendor profit for client outcomes—is the core of our model. It means:

- We don’t nickel-and-dime on quality. Tools, training, and ongoing skill development for our testers are provided as needed, at no extra cost to the client.

- We incentivize our QA engineers directly. Their compensation and growth opportunities are tied to delivering excellence, not just billable hours.

- We’re comfortable making less per contract because we know delighted clients stay and expand. The ROI of a truly defect-free release (and the goodwill it builds) far outweighs a few points of margin.

By reinvesting in talent and process, JGS avoids the false economies that plague other models. Our success is hitched to your success: finding every bug, tightening every feedback loop, and constantly improving test coverage.

We win when you ship with confidence!

Build Organizational Muscle, Not Vendor Dependency

As discussed in The Dependency Trap, a common concern with outsourcing QA is the loss of institutional knowledge.

Traditional outsourcing means your test plans, cases, and know-how live in the vendor’s tools and heads. If the vendor pulls out or fails, you’re left empty-handed. Companies often discover too late that they haven’t built any internal QA muscle: they outsourced not just the work, but the learning that comes with it.

The JGS mantra turns this paradigm upside-down: Your Team, Your Terms, Your Tools, Your Rules.

We embed our QA professionals directly into your team and workflows.36 They use your systems, whatever they are: JIRA for bug tracking, TestRail for test cases, or CI/CD pipelines for regression suites. You choose. All the test artifacts and improvements they create stay with you.

Over time, you’re not dependent on JGS at all. Instead, you’ve cultivated an extended QA capability in-house.

Crucially, if one of our team members does eventually move on, your testing structure remains intact and ready for a new expert to step in without disruption. We document and systematize our work according to your standards… and we pay performance bonuses when your JGS team members meet your standards better than your internal team does.

Other vendors may promise that knowledge is continuously transferred to your organization. In the JGS case—because we use your tools and only your tools—that knowledge never leaves your organization!

The goal is to leave your internal team stronger—with better processes and QA practices—than before we came in. In contrast to black-box vendor arrangements, JGS ensures you are building an asset, not a dependency.36

From Testers to Quality Champions

Because we hire top talent and integrate them into client teams, JGS-provided QA engineers do far more than tick off test scripts. They become proactive quality champions within your development organization.

This is a night-and-day difference from the “passive offshore tester” stereotype:37

- Ownership Mindset: Our testers take responsibility for the quality of the product as if it were their own. They don’t just execute tests; they question assumptions, suggest edge cases, and even propose usability improvements when they see them. This mirrors the way empowered employees drive innovation and continuous improvement.38

- Continuous Improvement: JGS instills a culture of never settling. If a defect got through, our team asks “how did we miss it and how do we improve our test suite to catch it next time?” They actively refine test cases and expand coverage so that quality increases sprint by sprint. Contrast this with traditional vendors who often stick rigidly to an out-of-date test plan; our approach is forward-looking and adaptive.

- Collaboration with Devs: Because our QA engineers sit within your workflow, they form tight feedback loops with your developers. They often shift their working hours early or late to overlap with a US customer, which lets them sync daily and also perform work while the US team is asleep. Bugs are reproduced and clarified in hours, not days of email tag. Developers trust our testers’ insights, and testers in turn understand the software design better. This synergy fosters a blameless, high-performance QA process rather than the adversarial, ticket-tossing dynamic too common with external teams.

The net effect is a team of QA professionals who function like an internal QA department that’s firing on all cylinders. They are invested in your success. Research shows that empowered, engaged employees are more effective problem-solvers and drive better outcomes—for instance, companies with highly engaged teams see 23% higher profitability and 10% higher customer loyalty on average.38 We’ve designed our model to capture that same power of engagement in an outsourced context.

Transparent, All-Inclusive Pricing

Despite providing elite talent and integrated service, JGS is able to offer simple, transparent pricing that undercuts typical local costs by a wide margin.

In fact, every full-time JGS QA resource is billed at a flat rate, regardless of their seniority or specialization:39

- $1,920 per month ($23,040 annually) for an annual commitment, paid in advance.

- $2,240 per month ($26,880 annually) for a quarterly commitment.

- $2,560 per month ($30,720 annually) with monthly billing.

These rates are comprehensive and all-inclusive: there are no hidden fees for recruitment, management, or equipment.
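For budgeting purposes, the three tiers can be compared with a short calculation. The monthly rates are taken from the list above; the helper names are illustrative, and the premium figures are simply derived from those rates.

```python
# Monthly rates in USD, as listed above, keyed by commitment length.
MONTHLY_RATES = {"annual": 1_920, "quarterly": 2_240, "monthly": 2_560}


def annualized(monthly_rate: int) -> int:
    """Twelve months at the given monthly rate."""
    return monthly_rate * 12


def premium_over_annual_pct(plan: str) -> float:
    """Percent extra paid per year compared with the annual-commitment rate."""
    base = MONTHLY_RATES["annual"]
    return round((MONTHLY_RATES[plan] - base) / base * 100, 1)


for plan, rate in MONTHLY_RATES.items():
    print(f"{plan:<9} ${annualized(rate):,}/yr  (+{premium_over_annual_pct(plan)}% vs annual)")
```

The quarterly and monthly options work out to roughly 16.7% and 33.3% more per year than the annual commitment, which is the price of the added flexibility.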

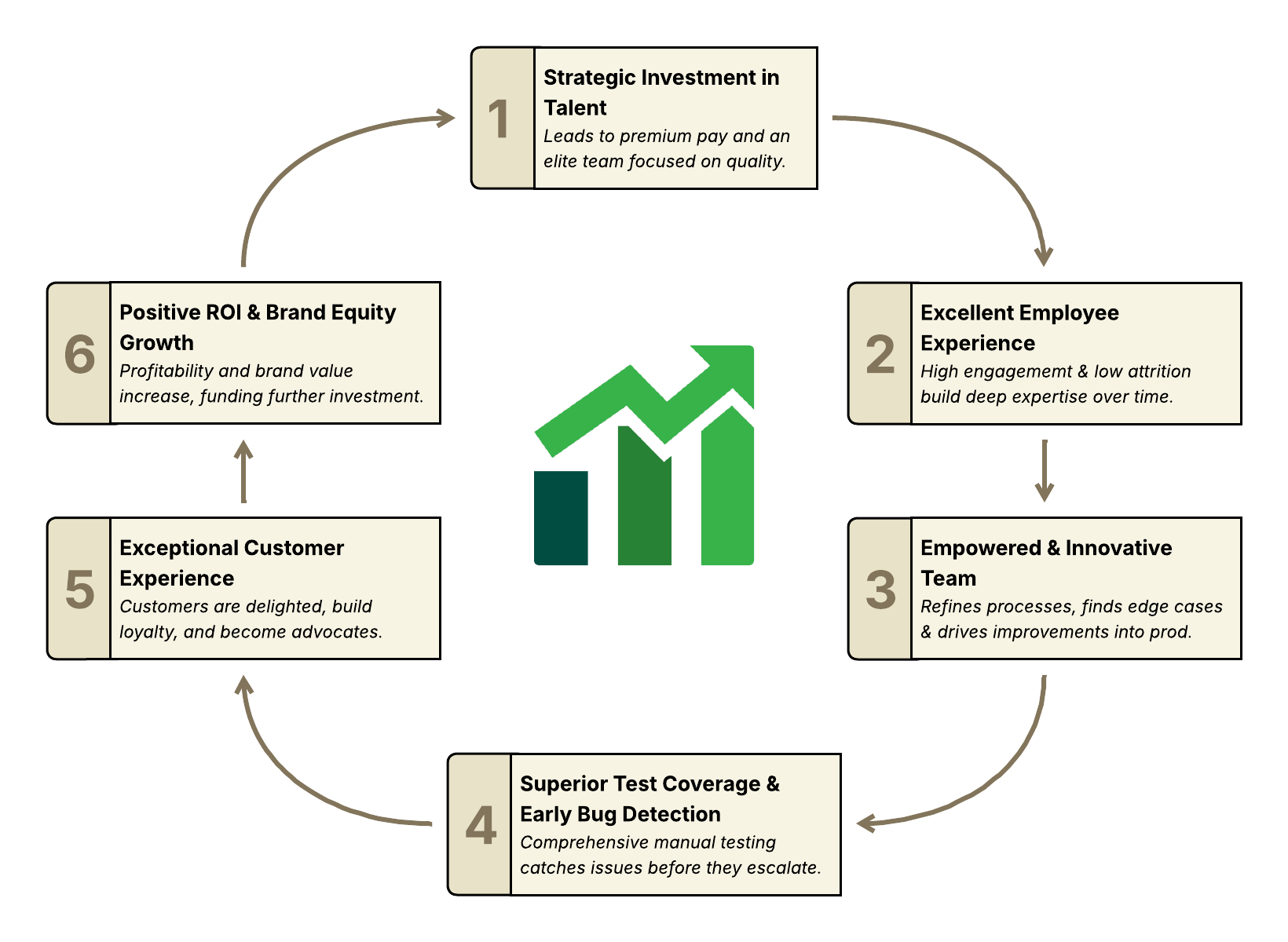

Winning By Design: The JGS Virtuous Cycle

The Vicious Cycle described above does not exist because organization managers don’t care enough or work hard enough.

This harmful dynamic is structural: once resource pricing and test coverage become the controlling strategic factors, the story can’t end any other way. Economics and biology demand it.

The dynamics of the JGS approach are deliberate and no less structural… but they lead to a very different outcome.

- Strategic Investment in Talent: Premium pay and selective hiring attract elite QA professionals focused on quality.

- Excellent Employee Experience: Engaged testers with low fatigue and attrition build deep expertise over time.

- Empowered & Innovative Team: Proactive champions refine processes, uncover edge cases, and drive improvements, including robust documentation.

- Superior Test Coverage & Early Bug Detection: Comprehensive manual testing catches issues before they escalate, generating high-quality docs.

- Exceptional Product Quality: Reliable software with clear user and internal docs delights users, reduces churn, and enhances satisfaction.

- Positive ROI & Brand Equity Growth: Lower defect costs, reduced support from better docs, and higher customer loyalty fuel reinvestment and growth.

The Bedrock of Quality: Mastering the Manual Testing Foundation

The solution to the Automation Paradox is not to abandon manual testing but to perfect it. The industry’s rush to automate has led many to forget a fundamental truth: you cannot automate what you do not understand.

Expert manual testing is not an outdated practice to be minimized; it is the essential strategic prerequisite for any successful, high-ROI automation program. It is the bedrock upon which all lasting software quality is built.

Building the Blueprint for Successful Automation

A successful automation program does not begin with writing code; it begins with deep, methodical, manual exploration. Manual testing should be viewed as the essential research and development (R&D) phase that precedes the capital expenditure of automation.

It is during this phase that QA engineers gain a profound understanding of the system’s behavior, discover the natural user flows, and uncover the critical edge cases that must be accounted for. This foundational knowledge is the raw material from which effective automation is built.

This manual-first approach allows for the validation and refinement of test cases before investing significant time and resources in scripting them. This dramatically reduces the risk of automating flawed, inefficient, or low-value test scenarios.40

Furthermore, it provides the necessary intelligence to prioritize automation efforts effectively. Not all tests provide equal value when automated; manual testing helps identify the ideal candidates—stable, high-value, repetitive tasks—that will deliver the greatest return on the automation investment.40

By first establishing a stable baseline of functionality through manual testing, an organization ensures it is not attempting to automate a constantly moving target.40
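The selection criteria described above (stable, high-value, repetitive) can be turned into a simple ranking. The sketch below is one hypothetical way to do it, not a JGS tool: the `Candidate` fields, the 1-to-5 scales, the multiplicative score, and the stability cutoff are all assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Candidate:
    """A manually executed test being considered for automation."""
    name: str
    stability: int        # 1 = feature changes constantly, 5 = rarely changes
    business_value: int   # 1 = cosmetic, 5 = critical path
    runs_per_month: int   # how often the test is repeated by hand


def automation_score(candidate: Candidate) -> int:
    """Hypothetical score: stable, valuable, frequently run tests rank highest."""
    return candidate.stability * candidate.business_value * candidate.runs_per_month


def prioritize(candidates: List[Candidate], min_stability: int = 3) -> List[Candidate]:
    """Drop moving targets, then sort the rest by descending score."""
    eligible = [c for c in candidates if c.stability >= min_stability]
    return sorted(eligible, key=automation_score, reverse=True)


if __name__ == "__main__":
    backlog = [
        Candidate("login regression", stability=5, business_value=5, runs_per_month=20),
        Candidate("redesigned checkout flow", stability=1, business_value=5, runs_per_month=20),
        Candidate("annual report export", stability=4, business_value=2, runs_per_month=1),
    ]
    for c in prioritize(backlog):
        print(c.name, automation_score(c))
```

In this example the redesigned checkout flow is excluded despite its high business value, because its low stability score marks it as a moving target that is better left to manual testing for now.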

This reframes the entire financial calculation of QA. The high upfront cost of automation is a known risk, and failed automation projects represent a significant waste of capital.16 By executing expert manual testing first, an organization can de-risk this much larger investment.

A dollar spent on foundational manual testing to correctly identify and specify an automation candidate can save five, ten, or twenty dollars in wasted automation development and maintenance down the line.

In this light, the cost of expert manual testing is not a simple operational expense. It is a form of insurance, a due diligence process performed on the much larger automation budget. This elevates the conversation from “How much do testers cost?” to “How do we best allocate our total quality budget to maximize ROI and minimize risk?”
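The insurance framing can be made concrete with an expected-value calculation. The following is a rough sketch under stated assumptions: it treats a failed automation project as a total loss of its cost, and every input in the example (project cost, failure probabilities, vetting cost) is a hypothetical number chosen for illustration, not data from this article.

```python
def expected_waste(automation_cost: float, failure_probability: float) -> float:
    """Expected spend lost if a failed automation effort is a total write-off."""
    if not 0.0 <= failure_probability <= 1.0:
        raise ValueError("failure_probability must be between 0 and 1")
    return automation_cost * failure_probability


def return_per_vetting_dollar(automation_cost: float,
                              p_fail_unvetted: float,
                              p_fail_vetted: float,
                              manual_vetting_cost: float) -> float:
    """Expected waste avoided per dollar spent on manual-first vetting."""
    if manual_vetting_cost <= 0:
        raise ValueError("manual_vetting_cost must be positive")
    avoided = (expected_waste(automation_cost, p_fail_unvetted)
               - expected_waste(automation_cost, p_fail_vetted))
    return avoided / manual_vetting_cost


if __name__ == "__main__":
    # Hypothetical inputs: a $100,000 automation project whose failure risk
    # drops from 50% to 20% after $5,000 of manual vetting.
    ratio = return_per_vetting_dollar(100_000, 0.5, 0.2, 5_000)
    print(f"Expected waste avoided per vetting dollar: {ratio:.1f}")
```

The ratio grows with the size of the automation budget and with the risk reduction that manual vetting delivers, which is why the argument is strongest for large, long-lived automation programs.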

The Manual-Test-to-Documentation Pipeline

An effective manual testing process naturally lends itself to creating comprehensive documentation. Well-crafted test scripts—detailing preconditions, steps, expected results, and edge cases—serve as a blueprint for both user-facing and internal docs.

For user documentation: UX-focused scripts can be repurposed into step-by-step guides, tutorials, and FAQs, ensuring accuracy and completeness.

For internal documentation: Test cases capture system behaviors, dependencies, and rationale, which can be transformed into code comments, architecture diagrams, and process wikis.

This pipeline involves:

- Scripting with Docs in Mind: Write tests in clear, narrative form suitable for adaptation.

- Tooling Integration: Use platforms like TestRail or Confluence to link tests to docs.

- Review and Refinement: Incorporate doc generation into test reviews.

- Automation for Maintenance: Once mature, automate doc updates from test changes.

By institutionalizing this pipeline, organizations close the documentation gap. That matters because maintenance typically consumes 60-80% of software lifecycle costs,22 and organizations with poor documentation see an average 37% increase in ticket resolution time.22
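To illustrate how a well-structured test script can double as a draft user guide, here is a minimal sketch. It assumes test scripts are stored as structured records with the fields named earlier in this section (preconditions, steps, expected results, edge cases). The `ManualTestScript` class, the `to_user_guide` function, and the password-reset example are hypothetical; they do not represent the export format of TestRail, Confluence, or any other tool.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class ManualTestScript:
    """A manual test written in narrative form, ready for reuse as docs."""
    title: str
    preconditions: List[str]
    steps: List[Tuple[str, str]]          # (action, expected result) pairs
    edge_cases: List[str] = field(default_factory=list)


def to_user_guide(script: ManualTestScript) -> str:
    """Render the test script as a Markdown how-to draft for human review."""
    lines = [f"## How to {script.title}", ""]
    if script.preconditions:
        lines.append("Before you start:")
        lines += [f"- {item}" for item in script.preconditions]
        lines.append("")
    lines.append("Steps:")
    for number, (action, expected) in enumerate(script.steps, start=1):
        lines.append(f"{number}. {action} You should see {expected}")
    if script.edge_cases:
        lines.append("")
        lines.append("Good to know:")
        lines += [f"- {item}" for item in script.edge_cases]
    return "\n".join(lines)


if __name__ == "__main__":
    script = ManualTestScript(
        title="reset your password",
        preconditions=["You can access the email address on your account."],
        steps=[
            ("Select 'Forgot password' on the sign-in page.",
             "a form asking for your email address."),
            ("Enter your email address and submit the form.",
             "a confirmation that a reset link was sent."),
        ],
        edge_cases=["Reset links can only be used once."],
    )
    print(to_user_guide(script))
```

The output is a draft, not finished documentation: the "Review and Refinement" step above is where a person polishes tone and removes tester-only detail.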

From Defect Detection to Business Intelligence

The value of expert manual testing extends far beyond creating a blueprint for automation. Its unique advantage lies in the application of human judgment, intuition, and experience: qualities that no automated script can replicate.41

A skilled human tester can assess subjective but critical factors like the logic of a workflow, the intuitiveness of the user experience (UX), and the overall usability of a feature in a way that goes far beyond a simple pass/fail check.40 This capability is most evident in exploratory testing, where a tester’s creativity and experience allow them to deviate from predefined scripts to uncover unexpected bugs and design flaws that were never considered in the original requirements.41

Manual testing is also inherently superior for validating the visual aspects of a user interface (UI), catching the misaligned elements, font inconsistencies, and layout glitches that automated visual regression tools are notoriously unreliable at flagging.42

When this human-centric feedback is captured and delivered in a structured way, the manual testing process transforms from a downstream bug hunt into an upstream source of business intelligence. This feedback, delivered early and clearly to the development and product teams, can inform design decisions and prevent the creation of features that are functionally correct but experientially flawed.

This creates a tight, real-time feedback loop that makes the entire development process not just safer, but smarter. It reduces wasted development cycles by preventing teams from building, testing, and then rebuilding a feature that is unusable. This positions the QA team not as a gatekeeper, but as an integrated, value-adding partner to the product and development organizations. They are no longer just finding bugs; they are helping to build a better product, faster.

Furthermore, properly written manual test scripts, especially those focused on UX testing, are just a bit of polish away from user documentation. These scripts detail step-by-step interactions with the software, which can be directly adapted into user guides, tutorials, and help documentation. This synergy addresses a prevalent issue in software development: inadequate user documentation.

Poor or insufficient documentation is a common problem in software projects. A McKinsey study cited in the source found that companies with poor documentation take 18% longer to release new features than their industry peers, and the same source points to higher development costs.21 Poor documentation also frustrates users, resulting in more support tickets and potential customer churn. The financial impact is substantial, with poor documentation contributing to hidden costs that can amount to millions in lost efficiency and rework.22

When manual testing is executed well, it not only ensures quality but also fills this documentation gap, providing dual value. Conversely, when manual testing is neglected or poorly performed, this opportunity is lost, exacerbating the costs associated with unclear user instructions.

Leveraging the manual-test-to-documentation pipeline extends this to internal docs, further reducing maintenance burdens and team friction.

Intelligent Automation: The Right Way

Because our team is composed of high-caliber professionals (many with coding skills), JGS can handle building and maintaining sophisticated test automation in-house… meaning YOUR house.

We don’t need to bring in separate consultants to write scripts: your embedded QA can evolve into an automation engineer when the time is right. This saves cost and ensures the same domain experts who learned the product manually are writing the scripts to test it. They know where the bodies are buried.

The bottom line on automation: tools don’t replace strategy. JGS will absolutely leverage automation—but only in service of a well-thought-out QA strategy. As one set of best practices says, “Don’t expect to automate everything. Some tasks will always require manual testing.”43 We automate what makes sense, and we do it exceptionally well, after we’ve proven manually that it’s worth automating. The result is a lean, effective test suite that accelerates release cycles without sacrificing quality.44

Premature automation skips the manual phase, failing to produce the rich test scripts that form the foundation for comprehensive user and internal documentation, thus perpetuating the documentation deficit and its associated costs.

A Phased Solution: From Manual Mastery to Strategic Automation

With JGS resources embedded in your team, you gain the capacity to implement a structured, two-phase approach to QA that addresses immediate manual testing pain points while paving the way for long-term automation gains.

This phased progression—from stabilization to optimization—becomes feasible because JGS handles the heavy lifting of labor-intensive tasks, freeing up your internal team and budget to focus on strategy, oversight, and higher-value activities. You’re always in the driver’s seat, directing the process while leveraging our elite talent to elevate your entire testing operation.

When QA costs plummet and QA coverage accelerates, you don’t just build things differently. You build different things.

Phase 1: Manual Foundation (Months 1-3)

Activities:

- Deep dive into application and requirements.

- Embed JGS team into client tools (Jira, Slack, etc.).

- Develop comprehensive, risk-based test plans.

- Execute manual regression, exploratory, and new feature testing.

Deliverables:

- Detailed, actionable bug reports with logs and recordings.

- Daily/weekly test execution reports.

- A prioritized backlog of automation candidates.

- Initial user and internal documentation generated from test scripts.

Business Outcomes:

- Immediate relief for your in-house team.

- Elimination of the “Fatigue Tax” and improved bug detection.

- Clear visibility into product quality (“Coverage Mirage” solved).

- A stable foundation for future development, automation, and documentation.

Phase 2: Strategic Automation (Months 4+)

Activities:

- Analyze manual test results to identify high-ROI automation candidates.

- Develop and script automated tests for stable, critical-path features.

- Integrate automated tests into client’s CI/CD pipeline.

Deliverables:

- Reports integrated into the CI/CD pipeline.

- A documented automation framework.

- Measurable reduction in regression testing time.

- Refined and expanded documentation based on insights from automation.

Business Outcomes:

- Drastically accelerated release cycles.

- Increased developer productivity (faster feedback).

- Long-term reduction in QA operational costs.

- A scalable, compounding quality asset for the organization, including living documentation.

This integrated, two-phase approach creates a flywheel of compounding knowledge and value. In a traditional model, the deep product knowledge gained by a manual testing team is often lost during a handoff to a separate automation team. With JGS resources augmenting your operation, this critical knowledge is retained within your ecosystem.

When you decide to automate, your extended team isn’t starting from scratch. They already know which UI elements are brittle, which user flows are most critical, and where the hidden complexities lie. This retained context allows you to build smarter, more resilient, and more valuable automation.

This is the ultimate fulfillment of building your organizational muscle: the knowledge gained is not just stored in documents; it is embedded within your integrated team and permanently codified into a lasting automation asset that improves your quality system over the long term.

The Bottom Line: Quality Assurance as a Profit Center

Traditional QA approaches have treated testing as a necessary cost—something to be squeezed in budget and minimized where possible. JGS flips that script. By providing elite talent that integrates into your processes, and applying economic insights to ease constraints, we enable you to turn QA into a source of value creation.

The difference is night and day:

- Higher Reliability: With comprehensive, methodical testing, your product experiences fewer incidents in production. That means less firefighting, fewer urgent patch releases, and far lower risk of catastrophic failures. Considering that poor software quality cost U.S. companies an estimated $2.41 trillion in 2022,1 preventing those defects is a direct boost to the bottom line.

- Better Customer Experience: Every release that goes out bug-free translates into smoother customer interactions. Instead of frustrating users with glitches, you delight them with quality. This pays dividends in customer retention and brand reputation. Remember that companies with superior customer experience financially outperform those with poor CX: $3.8 trillion in global sales are at risk due to bad customer service and experiences.45 Delivering quality is delivering great CX, enhanced by clear documentation.

- Faster Time-to-Market (with Confidence): Paradoxically, doing QA right can speed you up in the long run. When JGS professionals are finding and helping fix issues early, your team avoids late-stage scrambles. Releases can ship on schedule, or even faster, because you aren’t going back to repair broken things. Over time, the steady rhythm of well-tested releases builds a reputation of reliability that lets you be bolder in the market, supported by robust docs reducing post-release support.

- ROI of Engaged Teams: The JGS model demonstrates what many forward-thinking leaders know: investing in people and process yields a high return. Gallup research shows highly engaged organizations achieve significantly better business outcomes in profitability, customer loyalty, and even stock performance.38 By engaging top-notch QA engineers and empowering them, we create a virtuous cycle where quality feeds customer happiness, which feeds revenue and growth.

- Comprehensive Documentation: Leveraging manual testing to generate high-quality user and internal documentation reduces support costs, maintenance burdens, and knowledge gaps.

- Built-in Resilience: You avoid vendor dependency by building internal QA capabilities and organizational muscle you can measure on your balance sheet.

In summary, the old ways of handling QA, whether under-investing with a skeleton crew or offloading to the lowest bidder, are penny-wise and pound-foolish. They save a few dollars today only to burn far more in hidden costs and lost opportunities.

John Galt Services offers a smarter alternative: transform QA from a cost center into a profit center.

We provide the skilled people and proven approach to not only cut your testing costs, but dramatically raise your quality bar. The outcome is software that is more stable, customers who are more satisfied, and an operation that turns quality into a competitive advantage, with comprehensive documentation as a key enabler.

Why settle for the QA status quo, with all its headaches and shortcomings, when you could have a high-performance solution that pays for itself many times over?

With JGS backing up your QA team, you can deliver better software, faster and cheaper—without ever having to worry that a critical bug will be your next 3 AM crisis.

It’s time to rethink testing and embrace a 21st-century model designed for excellence. Your customers (and your CFO) will thank you for it!

Footnotes

1. Cost of Poor Software Quality in the U.S.: A 2022 Report - Our 2022 update report estimates that the cost of poor software quality in the US has grown to at least $2.41 trillion, but not in similar proportions as seen in previous years.

2. ‘Lost sales’ due to poor customer experiences could hit $1.4T in U.S. - A new global study by Qualtrics XM Institute reveals that poor customer experiences could lead to $1.4 trillion in lost sales in the U.S. by 2025, with 53% of consumers willing to cut spending after a bad experience, particularly affecting sectors like fast food and online retail.

3. Salary: Qa Engineer in United States 2025 - The average salary for a QA Engineer is $100,537 per year or $48 per hour in United States, which is in line with the national average.

4. Qa Software Tester Salary: Hourly Rate July 2025 USA - Current average annual salary: $83,472. Current hourly rate: $40. Overall salary range: $23,500 - $149,000. Majority wage range: $28.12 - $49.28 per hour.

5. True Cost of Software Defects: Customer Churn - Research by Forbes reveals acquiring a new customer is 4-5 times more expensive than retaining an existing one. So, it’s just as important to focus on customer retention as it is on acquisition.

6. Reducing Tester Fatigue in Manual Software Testing - Manual software testers often experience fatigue due to repetitive tasks, tight deadlines, and mental overload, but strategies such as rotating test types, optimizing test design, leveraging test management tools like TestMonitor, and encouraging breaks can help sustain focus and reduce errors.

7. Manual Testing Issues & Effective Solutions - Manual testing faces challenges such as time constraints, repetitive tasks, human errors, communication gaps, lack of test coverage, changing requirements, and limited resources, with solutions including prioritizing testing, using automation tools, reviewing test cases, maintaining clear documentation, and utilizing open-source tools to enhance efficiency and effectiveness.

8. Common Challenges in Manual Software Testing and How to Overcome Them - Manual software testing faces challenges such as time limitations, repetitive tasks, communication issues, and technical difficulties, which can be addressed through strategies like prioritizing testing scenarios, automating repetitive tasks, enhancing collaboration, and gradually mastering testing tools.

9. Common Challenges in Manual Testing and How to Overcome Them - Manual testing, essential for software quality, involves human testers executing test cases to identify defects, but faces challenges like human errors, diverse test environments, extensive documentation, and project scalability, which can be mitigated using structured systems, emulators, standardized practices, and contingency planning.

10. Top 7 Challenges in Manual Testing - Manual testing, while traditionally relied upon for validating software quality, faces significant challenges in modern Agile and Continuous Delivery environments, including time consumption, high resource demands, human errors, and difficulties in coping with frequent updates and ensuring comprehensive test coverage. Test automation, particularly with tools like Testsigma, is presented as a more efficient alternative, addressing these issues by reducing errors, saving time, and facilitating continuous delivery without requiring extensive programming knowledge.

11. [PDF] Impact of Overtime and Stress on Software Quality - In this paper we hypothesize a direct relationship between stress and quality of software. The hypothesis is based on data (Overtime, actual estimated time). The study finds that overtime of 150-200 hours correlates with higher defect rates.

12. Modeling Software Reliability with Learning and Fatigue - This page presents a study on modeling software reliability by integrating the effects of learning and fatigue in software testers, proposing two new software reliability growth models (SRGMs) using the tangent hyperbolic and exponential functions to represent learning, and an exponential decay function to model fatigue, with experimental results showing the model incorporating tangent hyperbolic learning with fatigue outperforming existing models in fit, predictive power, and accuracy.

13. Tester’s Diary: The QA Burnout - Tester burnout in the QA industry is a significant issue causing mental and physical exhaustion among testers due to factors like excessive workload, lack of control, inadequate rewards, poor work environment, unfair treatment, and value misalignment, leading to high turnover rates and increased recruitment costs. This creates a vicious cycle of hiring, onboarding, burning out, and replacing talent, which constantly drains team morale, institutional knowledge, and productivity.

14. How to Improve Test Coverage without Slowing Down Development? - To improve test coverage without slowing down development, focus on automating testing to increase efficiency and detect bugs early, integrating AI for faster test case generation and anomaly detection, and prioritizing test cases based on business impact and customer usage to ensure critical areas are tested first. Additionally, involve all roles in testing, use parallel testing to run multiple test cases simultaneously, and develop a strategic plan with clear goals to optimize testing efforts while maintaining speed.

15. How to ensure Maximum Test Coverage? - Test coverage involves monitoring the extent of tests executed on software, covering various types such as product, risk, requirements, code, platform/device coverage, and user scenario coverage, data, each focusing on different aspects like functionality, potential risks, and real-world user interactions, to ensure comprehensive testing and quality assurance. To maximize test coverage, strategies include creating a test coverage matrix, expanding code coverage, increasing test automation with tools like BrowserStack’s cloud Selenium grid, and maximizing device coverage across various environments.

16. Efficient QA Cost Management: Manual vs. Automated Testing - In this blog post, we will explore the factors that affect the costs of manual and Automated Testing and how making the right choice can optimize your QA cost.

17. Manual Testing vs Automation Testing - The page compares manual testing and automation testing, highlighting their key differences in speed, accuracy, cost-efficiency, flexibility, reliability, and suitability for user interface testing, and discusses the pros, cons, and appropriate use cases for each, concluding that a hybrid approach often works best. It also provides guidance on how to integrate both methods effectively and switch from manual to automation testing, emphasizing that neither can fully replace the other.

18. Manual Testing vs Automated Testing: Key Differences - Manual testing involves human testers interacting directly with software to identify issues, offering flexibility and intuition, while automated testing uses computer programs to execute predefined scripts, providing efficiency and precision for repetitive tasks. The choice between them depends on factors like cost, timeline, team skills, and project requirements, with a hybrid approach often recommended for comprehensive coverage.

19. 16 Best Test Automation Practices to Follow in 2025 - Identify High-Value Tests: Automate tests that are used frequently, have a high impact, and are stable, such as regression and smoke tests. Avoid automating tests that are volatile or require human intuition.

20. Manual vs Automation Testing: Differences, Challenges, & Tips - Coordinating automated and manual testing is essential for enhancing testing efficiency, as both approaches complement each other by covering different aspects of software quality, with automated tests ensuring speed and consistency, and manual tests providing human perspective for unscripted scenarios and user experience evaluation. Effective management of both testing types requires centralized coordination, shared goals, and consistent naming conventions to ensure visibility and communication across teams, addressing challenges like differing test scales and result timelines.

21. The Hidden Cost of Poor Documentation in Software Development - A study by McKinsey found that companies with poor documentation take 18% longer to release new features compared to industry peers. Higher Development Costs.

22. The Business Impact of Poor Tech Documentation: A Global Analysis - Poor documentation creates a cascade of consequences that ripple throughout organizations: decreased productivity, increased support costs, implementation delays, and frustrated users. The IEEE Systems Journal reports that maintenance typically consumes 60-80% of software lifecycle costs, with poor documentation significantly increasing this burden. Zendesk’s benchmark data reveals that organizations with poor documentation experience an average 37% increase in ticket resolution time.

23. Calculating the ROI of documentation and knowledge bases - Customer churn - Perhaps most costly of all, poor documentation contributes directly to customer attrition. When customers cannot find the information they need, frustration builds, leading to canceled subscriptions and lost revenue.

24. The Hidden Costs of Inadequate Engineering Documentation - Poor documentation breeds confusion and silos. Engineers, IT admins, and managers may all have different understandings, leading to miscommunication and inefficient workflows.

25. The Hidden Dangers of Incorrect Documentation in Software Development - Incorrect documentation creates a false sense of security, misleading teams and exacerbating technical debt.

26. Poor and Insufficient Documentation in Software Development - Poor documentation often forces developers to spend valuable time searching for essential information, hampering their productivity.

27. Four hidden costs of offshoring software development - 1. Communication barriers inhibit innovation and collaboration · 2. Offshore teams take longer to ramp up · 3. Rework of low-quality offshore software development.

28. 7 Hidden Costs of Offshore Software Development - Think offshore coding is cheaper? Discover the seven hidden costs that can turn a $25/hour developer into a six-figure overrun, and learn how to avoid them.

29. IT-BPO Talent Crisis - The IT-BPO industry is facing a significant talent crisis, with high attrition rates (30-50%) driven by demand surges and employee value proposition challenges. Service providers must enhance employee-centric cultures to manage this crisis.

30. What is Cost of Quality (COQ)? - Cost of Quality is a method that allows organizations to determine the costs associated with producing and maintaining quality products.

31. Software Testing Outsourcing in 2025: All You Need To Know - The content discusses risks in outsourcing QA, including loss of internal expertise (leading to knowledge gaps and reduced in-house skills) and vendor reliance (causing inconsistent delivery, control challenges, data breaches, and dependency on vendor capabilities). Mitigation strategies include strong NDAs, proper onboarding, tools like GitHub for version control, phased engagements, risk registers, and regular reviews.

32. A Comprehensive Guide to QA Outsourcing Services - One key risk is vendor dependence, where over-reliance on a single outsourcing provider can be risky, as the vendor’s operational challenges, such as financial or operational issues, can negatively affect the project’s success.

33. Outsourced Software Testing: Best Practices For Choosing a Testing Partner - Relying heavily on an external provider can create dependency, which may be risky if the provider faces resource constraints or financial instability.

34. Top Risks of Outsourcing in 2025 and How to Avoid Them - Risk of becoming dependent on a single vendor’s proprietary tools, processes, or expertise, which can make switching providers expensive or technically challenging, open up to unexpected price hikes, create vulnerabilities if the vendor’s business suffers, and limit the ability to scale or adapt quickly.

35. Is QA Outsourcing Worth It? Exploring the Costs and Benefits - Communication challenges, such as language barriers and time zone differences, can lead to misunderstandings and gaps in knowledge transfer. While QA outsourcing offers initial cost savings by avoiding infrastructure and training expenses, long-term costs may include ongoing vendor fees and potential expenses related to addressing quality control concerns or security breaches. The content highlights potential risks such as lack of control over the QA process and dependency on external vendors, which could impact the overall cost-benefit analysis.

36. Build Organizational Muscle, Not Vendor Dependency - John Galt Services (JGS) promotes building organizational muscle by integrating remote professionals into a client’s existing team, using their systems and procedures, ensuring corporate knowledge remains an internal asset and fostering long-term resilience, unlike traditional outsourcing which creates vendor dependency by building external teams. This approach, exemplified by JGS’s “follow-the-sun” model with elite talent in Bali, Indonesia, aims to enhance organizational capability without the risks associated with vendor reliance.

37. From Passive Workers to Empowered Innovators - John Galt Services (JGS) transforms the traditional offshore staffing model by paying premium rates to attract elite talent in Bali, aligning their daytime with U.S. nighttime to eliminate fatigue, and fostering an environment where employees are empowered innovators rather than passive workers, driving superior service and continuous improvement for clients. This approach contrasts with traditional models that often result in disengaged workers, high attrition, and poor service quality, by instead building organizational resilience and enhancing customer experience through proactive problem-solving and ownership.

38. The $8.8 Trillion Workplace Problem - At least 50% of the U.S. workforce are “quiet quitters” who do the minimum required and are psychologically detached from their jobs, with engagement dropping and disengagement rising, particularly among younger workers under 35, amid a growing disconnect from employers since the pandemic. Managers are crucial in addressing this issue by having regular meaningful conversations with employees to reduce disengagement and burnout, and by creating accountability for performance and connection to the organization’s purpose.

39. Senior Level Talent: Entry-Level Cost - Full-time resources: Annual commitment: $1,920 per month ($12 per hour); Quarterly: $2,240 ($14 per hour); Monthly: $2,560 ($16 per hour). All-inclusive: equipment, systems, training, management, and talent. No hidden fees.

40. Is Knowing Manual Testing Important Before Learning … - While knowing manual testing is not an absolute requirement for learning test automation, it undoubtedly provides a strong foundation.

41. Advantages and Disadvantages of Manual Testing - Manual testing involves human testers executing test cases to evaluate software functionality and quality, particularly effective for integration, system, and user acceptance testing, offering advantages like ensuring user experience and meeting certification standards. However, it has disadvantages such as being time-consuming and less suitable for regression or performance testing, requiring detailed steps for planning and execution to identify defects effectively.

42. Advantages of Manual Testing - Manual testing offers distinct advantages such as visual accuracy, cognitive insight, and adaptability to changes, making it indispensable for exploratory, UI/UX, and human-centric validation, particularly in early development stages and short-lifecycle projects, despite its limitations like being time-consuming and less scalable compared to automation. BrowserStack enhances manual testing with real-device access, instant scalability, and powerful debugging tools, allowing testers to conduct robust QA without setup overhead, while also supporting automation for a hybrid testing strategy.

43. 5 rules for successful test automation - Successful test automation requires setting realistic expectations by understanding both the capabilities and limitations of the software, treating it as a development project that needs proper planning and skilled personnel, and designing applications with testability in mind to ensure effective testing processes.

44. How to Write Test Cases for Automation Testing? - To write effective test cases for automation testing, start by deciding what to automate based on impact, then create detailed test cases including components like Test Case ID, Description, Preconditions, Test Steps, Test Data, Expected and Actual Results, Postconditions, Pass/Fail Criteria, and Comments, as exemplified by a test case for the Etsy login popup. You can then turn these test cases into test scripts using either a test automation framework with programming or a tool that allows building test cases with minimal coding.

45. $3.8 Trillion of Global Sales are at Risk Due to Bad Customer Experiences in 2025 - Businesses around the world risk $3.8 trillion in sales due to bad customer experiences – a figure $119 billion higher than last year.